These eye-opening AI misuse statistics will show you that not all uses of AI are positive.

Artificial intelligence is now a key part of our daily lives. From medical research to student learning tools, it’s already streamlining industries across the board. Let’s take a look at some of its downsides.

Most Shocking AI Misuse Statistics

Whether it’s cheating, infringing on copyright, meddling in politics, or the worst kind of deepfakes, the scale and breadth of AI misuse will shock you:

1 out of 10 student assignments contained AI-generated content in 2023.

84% of workers may have exposed company data by using AI.

90% of artists believe copyright law is lacking for AI.

78% of people open AI-written phishing emails.

Phishing emails have risen 1,265% since ChatGPT launched.

75% worry deepfakes could influence elections.

Searches for NSFW deepfakes have increased 5,800% since 2019.

4 million people a month use deepfake ‘Nudify’ apps.

Student Misuse of AI Statistics

AI in education has many legitimate applications, but it would be foolish to think students aren’t using it to do the work for them.

1. 1 out of 10 student assignments contained AI-generated content in 2023.

(Source: Turnitin)

In the year since the education-focused Turnitin plagiarism checker launched its AI detector, roughly 1 in 10 higher-education assignments contained content generated by AI tools like ChatGPT.

Additionally, of the 200 million papers analyzed, more than 6 million were at least 80 percent AI-generated.

2. Almost 50% of students surveyed admitted to using AI in some form for study.

(Source: Tyton Partners)

One 2023 paper found that almost half of students were using AI, with 12% using it daily.

Even more worrying, 75% say they’ll continue to use AI even if institutions ban it.

3. Students and faculty are split 50/50 on the pros and cons of AI.

(Source: Tyton Partners)

The same research found that in the spring, students and faculty were roughly evenly divided on whether AI would have a positive or negative impact on education: a clear split on the pros and cons.

Interestingly, by the fall, 61% of faculty were in favor of integrating AI, while 39% still considered it a negative. This demonstrates a gradual change in opinion.

4. 68% of middle school and high school teachers have used AI detection tools.

(Source: Center for Democracy & Technology)

In a 2024 paper, the majority of school-level teachers said they’d used AI detection, a rise from the previous year.

Moreover, nearly two-thirds reported students facing consequences for allegedly using generative AI in their assignments, up from 48% in the 2022-2023 school year.

It seems AI is now fully embedded in middle school, high school, and higher education.

AI Misuse Statistics on the Job

AI is growing in every industry, and students aren’t the only ones cutting corners. The rate of workers who fear AI or use it in risky ways is fascinating.

5. More than half of workers use AI weekly.

(Source: Oliver Wyman Forum)

Based on a study of more than 15,000 workers across 16 countries, over 50% say they use AI on a weekly basis for work.

6. 84% of workers may have exposed company data by using AI.

(Source: Oliver Wyman Forum)

In the same study, 84% of those who use AI admit that doing so could have exposed their company’s proprietary data, posing new risks for data security.

7. Despite concerns, 41% of employees surveyed would use AI for finances.

(Source: Oliver Wyman Forum, CNN World)

Risk also extends to finances. Although 61% of employees surveyed are concerned about the trustworthiness of AI results, 40% of those would still use it to make “big financial decisions.”

30% would even share more personal data if that meant better results.

That may not be so wise: one finance worker in Hong Kong was duped into transferring $25 million after cybercriminals deepfaked the company’s chief financial officer in a video conference call.

8. 37% of workers have seen inaccurate AI results.

(Source: Oliver Wyman Forum)

Concerns about trustworthiness are warranted, as nearly 40% of US employees say they’ve seen “errors made by AI while it has been used at work.” Perhaps a bigger concern is how many employees have acted on false information.

Interestingly, the highest share of employees spotting inaccurate AI information is in India, at 55%, followed by Singapore at 54% and China at 53%.

At the other end of the scale, 31% of German employees have noticed errors.

9. 69% of employees fear personal data misuse.

(Source: Forrester Consulting via Workday)

It’s not just employers that face potential risk from workers. A 2023 study commissioned by Workday suggests more than two-thirds of employees are worried that using AI in the workplace could put their own data at risk.

10. 62% of Americans fear AI use in hiring decisions.

(Source: Ipsos Consumer Tracker, ISE, Workable)

Before employees even make it into the workforce, AI in hiring decisions is a growing concern.

According to Ipsos, 62% of Americans believe AI will be used to decide on successful job candidates.

ISE supports this concern, as it found 28% of employers rely on AI in the hiring process.

Moreover, a 2023 survey of 3,211 professionals came to a similar figure, with 950 (about 29.6%) admitting to using AI in recruitment.

11. Americans are most concerned about AI in law enforcement.

(Source: Ipsos Consumer Tracker)

67% of polled Americans fear AI will be misused in policing and law enforcement. This is followed by fear of AI in hiring and of “too little federal oversight in the application of AI” (59%).

Copyright AI Misuse Stats

If AI can only rely on existing information, such as art styles or video content, where does that leave the original creator and copyright holder?

12. 90% of artists believe copyright law is lacking for AI.

(Source: Book and Artist)

In a 2023 survey, 9 out of 10 artists said copyright laws are outdated when it comes to AI.

Additionally, 74.3% think scraping internet content for AI training is unethical.

With 32.5% of their annual income coming from art sales and services, 54.6% are concerned that AI will affect their income.

13. An ongoing class action lawsuit by artists alleges copyright infringement by AI companies.

(Source: Data Privacy and Security Insider)

Visual artists Sarah Andersen, Kelly McKernan, and Karla Ortiz are in an ongoing legal battle against Midjourney and Stability AI, the maker of Stable Diffusion, among others.

A judge has ruled that it is plausible the AI models function in a way that infringes on copyrighted material, so the plaintiffs’ claims can proceed.

14. Getty Images claims Stability AI illegally copied 12 million images.

(Source: Reuters)

It’s not only individual artists: photography giant Getty Images is also going after Stable Diffusion.

In 2023, the stock photo provider filed a lawsuit alleging Stability AI copied 12 million of its photographs to train its generative AI model.

Getty licenses its photographs for a fee, which the billion-dollar-plus AI company never paid.

The outcome of such cases could bring major changes to the way AI art and photo generators operate.

15. A 10-step set of copyright best practices has been proposed.

(Source: Houston Law Review)

To tackle the issue of AI copyright infringement, Matthew Sag has proposed a set of 10 best practices.

These include programming models to learn abstractions rather than specific details and filtering out content that’s too similar to existing works. Moreover, records should be kept of training data that involves copyrighted material.

Scam and Criminal AI Misuse Stats

From catfishing to ransom calls, scammers and criminals misuse AI in increasingly frightening ways. These stats and facts paint a future of ever-increasing criminal sophistication:

16. 25% of people have experienced AI voice cloning scams.

(Source: McAfee)

Cybercriminals can use AI to clone people’s voices and then use them in phone scams. In a 2023 survey of 7,000 people, one in four said they had experienced a voice scam firsthand or knew someone who had.

More worrying, 70% of those surveyed said they weren’t confident they could tell the difference.

17. AI voice cloning scams steal between $500 and $15,000.

(Source: McAfee)

77% of these victims lost money, and successful AI voice cloning scams aren’t going after small change.

The survey notes that 36% of targets lost between $500 and $3,000, while 7% were conned out of between $5,000 and $15,000.

18. 61% of cybersecurity leaders are concerned about AI-drafted phishing emails.

(Source: Egress)

It’s not just modern, elaborate voice cloning that criminals are misusing. Traditional phishing emails are also a growing concern.

In 2024, 61% of cybersecurity leaders said the use of chatbots in phishing keeps them awake at night. This may be because AI is accurate and fast.

Additionally, 52% think AI could aid supply chain compromises, and 47% worry it could assist in account takeovers.

19. AI can help scammers be more convincing.

(Source: Which?)

We’ve all seen the stereotypical email scam of a wealthy prince offering a small fortune if you just send some money to help unblock a larger transfer. Riddled with grammatical errors and inconsistencies, these scams now often end up buried in the spam folder.

However, AI chatbots are capable of cleaning up non-English scam messages to make them more convincing.

Despite this being against their terms, Which? was able to produce legitimate-looking messages posing as PayPal and delivery services using ChatGPT and Bard.

20. 78% of people open AI-written phishing emails.

(Source: SoSafe)

To further demonstrate how effective AI is at producing convincing scams, one study found that recipients opened nearly 80% of AI-written phishing emails.

While the study found similar results for human-written scams, interaction rates were generally higher for the AI-generated emails.

Nearly two-thirds of individuals were deceived into divulging private details in online forms after clicking malicious links.

21. Creating phishing emails is 40% faster with AI.

(Source: SoSafe)

Research also suggests that, besides making scams look more convincing, AI can produce them faster.

Phishing emails are created 40% faster using AI, meaning that in the numbers game, cybercriminals will be more successful and can scale up their operations.

22. Phishing emails have risen 1,265% since ChatGPT was launched.

(Source: SlashNext)

While correlation doesn’t necessarily imply causation, AI and phishing go hand in hand. Since ChatGPT launched, there has been a staggering 1,265% increase in the number of phishing emails sent.

23. Cybercriminals use their own AI tools like WormGPT and FraudGPT.

(Source: SlashNext – WormGPT, KrebsOnSecurity)

Public AI tools like ChatGPT have their place in the cybercrime world. However, to skirt restrictions, criminals have developed their own tools like WormGPT and FraudGPT.

Evidence suggests WormGPT is implicated in Business Email Compromise (BEC) attacks. It can also write malicious code for malware.

WormGPT sells access to its platform via a channel on the encrypted Telegram messaging app.

24. More than 200 AI hacking services are available on the dark web.

(Source: Indiana University via the WSJ)

Many other malicious large language models exist. Research from Indiana University found more than 200 such services, both paid and free, on the dark web.

The first of its kind emerged just months after ChatGPT itself went live in 2022.

One common hack is called prompt injection, which bypasses the restrictions of popular AI chatbots.

25. AI has detected thousands of malicious AI emails.

(Source: WSJ)

In some ironic good news, companies like Abnormal Security are using AI to detect which malicious emails are AI-generated. The company claims to have detected thousands since 2023 and blocked twice as many personalized email attacks in the same period.

26. 39% of Indians found online dating matches were scammers.

(Source: McAfee – India)

Cybercriminals use romance as one way of finding victims, and recent research in India revealed nearly 40% of online dating interactions involved scammers.

From common fake profile pictures to AI-generated photos and messages, the study found romance scammers to be rife on dating and social media apps.

27. 77% of surveyed Indians believe they’ve interacted with AI-generated profiles.

(Source: McAfee – India)

The study of 7,000 people revealed that 77% of Indians have come across fake AI dating profiles and photos.

Additionally, of those who responded to potential love interests, 26% discovered them to be some form of AI bot.

However, research suggests a culture that may also be embracing the art of catfishing with AI.

28. Over 80% of Indians believe AI-generated content garners better responses.

(Source: McAfee – India, Oliver Wyman Forum)

The same research found that many Indians are using AI to boost their own desirability in the online dating realm, though not necessarily for scamming.

65% of Indians have used generative AI to create or enhance photos and messages on a dating app.

And it’s working: 81% say AI-generated messages elicit more engagement than their own natural messages.

In fact, 56% planned to use chatbots to craft better messages for their partners on Valentine’s Day 2024.

Indeed, other research suggests 28% of people believe AI can capture the depth of real human emotion.

However, Indians take note: 60% said that if they received an AI message from a Valentine’s lover, they’d feel hurt and offended.

29. One ‘Tinder Swindler’ scammed roughly $10 million from his female victims.

(Source: Fortune)

One of the most high-profile dating scammers was Shimon Hayut. Under the alias Simon Leviev, he scammed women out of $10 million using apps, doctored media, and other trickery.

Yet after he featured in the popular Netflix documentary The Tinder Swindler, the tables turned: he was conned out of $7,000 on social media in 2022.

Reports state someone posed as a couple on Instagram with ties to Meta. They managed to get him to transfer money via good old PayPal.

AI Fake News and Deepfakes

Fake news and misinformation online are nothing new. However, with the power of AI, they are becoming easier to spread, and it is becoming harder to distinguish between real, false, and deepfake content.

30. Deepfakes increased tenfold in 2023.

(Source: Sumsub)

As AI technology burst into the mainstream, so did deepfakes. Data suggests the number of deepfakes detected rose tenfold in 2023 and is only increasing this year.

By industry, cryptocurrency-related deepfakes made up 88% of all detections.

By region, North America experienced a 1,740% increase in deepfake fraud.

31. AI-generated fake news posts increased by 1,000% in a single month.

(Source: NewsGuard via the Washington Post)

In May 2023 alone, the number of fake news-style articles increased by 1,000%, according to fact checker NewsGuard.

The research also found that, by its criteria, AI-powered misinformation websites skyrocketed from 49 to 600 over the same period.

Whether financially or politically motivated, AI is now at the forefront of fake stories, which are widely shared on social media.

32. Canada is the most worried about AI fake news.

(Source: Ipsos Global Advisor)

In a survey of 21,816 citizens in 29 countries, 65% of Canadians were worried that AI would make fake news worse.

Americans were slightly less worried, at 56%. One reason given is a decrease in the number of local news outlets in Canada, leaving people to turn to lesser-known sources of information.

33. 74% think AI will make it easier to generate realistic fake news and images.

(Source: Ipsos Global Advisor)

Of all citizens surveyed across every country, 74% felt AI is making it harder to distinguish real news and images from fake ones.

Indonesians felt most strongly about the issue (89%), while Germans (64%) were the least concerned.

34. 56% of people can’t tell if an image is real or AI-generated.

(Source: Oliver Wyman Forum)

Research suggests more than half of people can’t distinguish fake AI-generated images from real ones.

35. An AI image was in Facebook’s top 20 most-viewed posts in Q3 2023.

(Source: Misinformation Review)

Whether we believe them or not, AI images are everywhere. In the third quarter of 2023, one AI image garnered 40 million views and over 1.9 million engagements, putting it in the top 20 most-viewed posts for the period.

36. An average of 146,681 people follow 125 AI image-heavy Facebook pages.

(Source: Misinformation Review)

An average of 146,681 people followed each of 125 Facebook pages that posted at least 50 AI-generated images during Q3 2023.

This wasn’t just harmless art, as researchers classified many of the pages as spammers, scammers, and engagement farms.

Altogether, the images were viewed hundreds of millions of times. And this is only a small subset of such pages on Facebook.

37. 60% of consumers have seen a deepfake in the past year.

(Source: Jumio)

According to a survey of over 8,000 adult consumers, 60% encountered deepfake content in the past year. While 22% were unsure, only 15% said they had never seen a deepfake.

Of course, depending on the quality, people may not even know they’ve seen one.

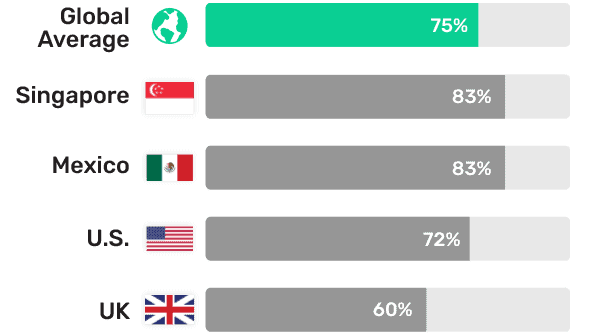

38. 75% worry deepfakes could influence elections.

(Source: Jumio)

In the United States, 72% of respondents feared AI deepfakes could influence upcoming elections.

The biggest worry comes from Singapore and Mexico (83% each), while the UK is less worried about election interference, at 60%.

Despite this, UK respondents felt the least capable of spotting a deepfake of a politician (33%).

Singapore was the most confident, at 60%, which may suggest concern correlates with individual awareness of deepfakes.

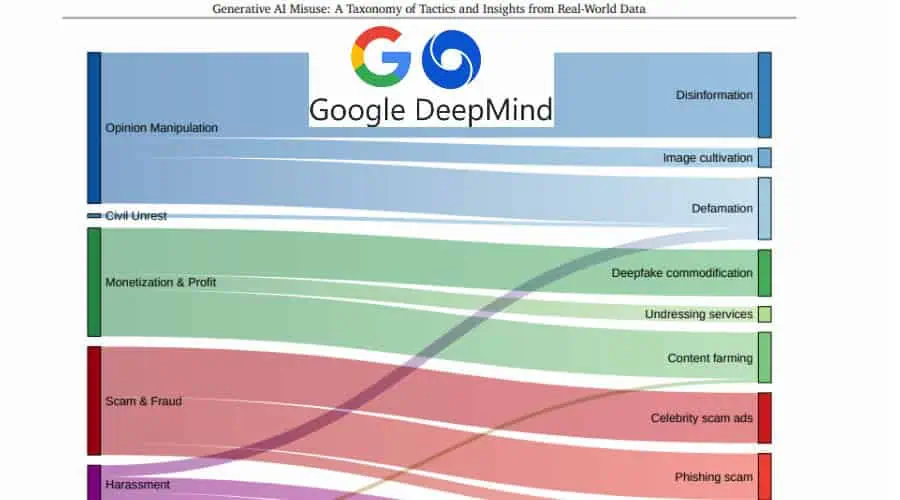

39. Political deepfakes are the most common.

(Source: DeepMind)

According to research from DeepMind and Google’s Jigsaw, the most common misuse of AI deepfakes is in the political sphere.

More precisely, 27% of all reported cases attempted to distort the public’s perception of political realities.

The top 3 tactics are disinformation, image cultivation, and defamation.

After opinion manipulation, monetization and profit, followed by scams and fraud, were the second and third most common goals of deepfakes.

40. Just 38% of students have learned how to spot AI content.

(Source: Center for Democracy & Technology)

Education is one way to tackle the impact of deepfakes, but schools may be failing. Just 38% of UK school students say they’ve been taught how to spot AI-generated images, text, and videos.

Meanwhile, 71% of students expressed a desire for guidance from their educators.

Adult, Criminal, and Inappropriate AI Content

The adult industry has always been at the forefront of technology, and AI is just the next step. But what happens when users pay for content they think is real? Or worse, when people deepfake others without consent?

41. Over 143,000 NSFW deepfake videos were uploaded in 2023.

(Source: Channel 4 News via The Guardian)

Crude fake images of celebrities superimposed onto adult stars have been around for decades, but AI has increased their quality and popularity, as well as introducing videos.

As AI technology rapidly developed in 2022/2023, 143,733 new deepfake videos appeared online during Q1-3 of 2023.

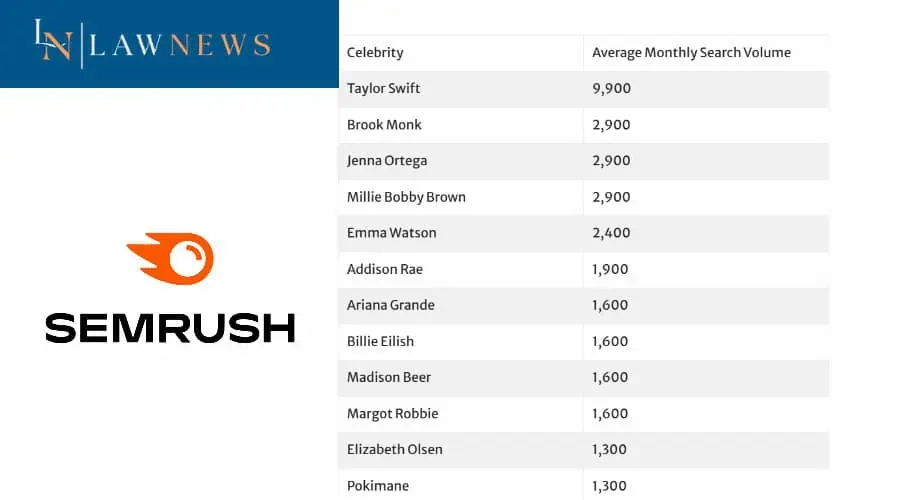

42. Searches for NSFW deepfakes have increased by 5,800% since 2019.

(Source: SEMRush via Law News)

One sign of the rise of adult deepfakes is search engine volume. According to an analysis using SEMRush, searches for the term in this context have increased by 5,800% since 2019, which puts them at roughly 59 times their 2019 level.
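Percentage increases this large are easy to misread, because the percentage counts only the growth on top of the original 100%. As a rough illustration, here is a minimal Python sketch of the arithmetic applied to figures quoted in this article; the function names are hypothetical, written for this example rather than taken from any of the cited sources.

```python
def multiplier_from_percent_increase(pct: float) -> float:
    """A rise of `pct` percent leaves a value at (1 + pct/100) times its original level."""
    return 1 + pct / 100


def percent_increase(before: float, after: float) -> float:
    """Percentage change from `before` to `after` (assumes before > 0)."""
    return (after - before) / before * 100


# Figures quoted in this article
print(multiplier_from_percent_increase(5_800))            # 59.0  (NSFW deepfake searches since 2019)
print(round(multiplier_from_percent_increase(1_265), 2))  # 13.65 (phishing emails since ChatGPT)
print(round(percent_increase(49, 600)))                   # 1124  (AI misinformation sites, NewsGuard)
```

So a 5,800% rise means search volume is about 59 times its starting point, and the jump from 49 to 600 misinformation sites works out to an increase of roughly 1,124%.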

43. 4,000 celebrities have been victims of NSFW deepfakes.

(Source: Channel 4 News via The Guardian)

Research on the most popular adult deepfake websites found the likenesses of roughly 4,000 celebrities generated into explicit images and videos.

Five sites received over 100 million views over a three-month period in 2023.

Many countries are now proposing laws to make such content illegal.

44. 96% of deepfake imagery is adult content.

(Source: Deeptrace)

As early as 2019, 96% of deepfakes online were adult in nature, and 100% of those targeted women (mostly celebrities) who hadn’t given consent.

At the other end of the scale, on YouTube, where nudity is prohibited, 61% of deepfakes featured men and were “commentary-based.”

45. Taylor Swift deepfakes went viral in 2024 with over 27 million views.

(Source: NBC News)

In January 2024, Taylor Swift became the most-viewed subject of deepfakes when NSFW AI images and videos spread online, mostly on Twitter/X.

In 19 hours, the primary content received over 260,000 likes before the platform removed the material and briefly blocked her from the trending algorithm.

Some deepfakes also depicted Taylor Swift as a Trump supporter.

46. Jenna Ortega is the 2nd most searched deepfake celebrity in the UK.

(Source: SEMRush via Law News)

Based on UK search data, actress Jenna Ortega is the most searched celebrity for adult deepfakes after Taylor Swift.

She’s tied with influencer Brooke Monk and fellow actress Millie Bobby Brown.

The top 20 list includes everyone from singer Billie Eilish to streamer Pokimane, but all are women.

47. Fake AI models make thousands monthly on OnlyFans-style platforms.

(Source: Supercreator, Forbes, MSN)

OnlyFans and similar platforms are known for their adult content, but now AI means real people don’t have to bare it all for the camera.

Personas like TheRRRealist and Aitana López make thousands a month.

While the former is open about being fake, the latter has been coyer, raising ethical questions. And they aren’t the only ones.

Even AI influencers outside the adult space, such as Olivia C (run by a two-person team), make a living through endorsements and ads.

48. 1,500 AI models entered a beauty pageant.

(Source: Wired)

The World AI Creator Awards (WAICA) launched this summer via AI influencer platform Fanvue. One part was an AI beauty pageant, which netted Kenza Layli (or rather her anonymous creator) $10,000.

Over 1,500 AI creations, complete with elaborate backstories and lifestyles, entered the contest. The Moroccan persona Kenza had a positive message of empowering women and diversity, but deepfake misuse isn’t always so “uplifting.”

49. 6,000 people protested a widespread deepfake scandal targeting teachers and students.

(Source: BBC, The Guardian, Korea Times, CNN)

A series of school deepfake scandals emerged in South Korea in 2024, in which hundreds of thousands of Telegram users were sharing NSFW deepfakes of female teachers and students.

Over 60 victims were identified whose AI imagery had spread onto public social media.

This led to 6,000 people protesting in Seoul. Eventually, 800 crimes were recorded, several resulting in convictions, and new laws were passed.

Around 200 schools were implicated, with most victims and offenders being teenagers.

50. 4 million people a month use deepfake ‘nudify’ apps on women and children.

(Source: Wired – Nudify)

Beyond celebrities, deepfake AI misuse has much darker implications.

In 2020, an investigation of AI-powered apps that “undress” photos of women and children found 50 bots on Telegram for creating deepfakes of varying sophistication. Two alone had more than 400,000 users.

51. Over 20,000 AI-generated CSAM images were posted to a single dark web forum in a month.

(Source: IWF)

Perhaps the most shocking of these AI misuse statistics comes from a 2023 report by a safeguarding organization. Its investigators discovered tens of thousands of AI-generated CSAM images posted to just one forum on the dark web over a month.

The report notes that this has increased in 2024 and that such AI images have also increased on the clear web.

Cartoons, drawings, animations, and pseudo-photographs of this nature are illegal in the UK.

Wrap Up

Despite the adoption of AI across many fields, it’s still very much the Wild West. What does society deem acceptable, and should there be more regulation?

From students generating assignments and risky use in the workplace to realistic scams and illegal deepfakes, AI has many concerning applications.

As these AI misuse statistics show, it may be time to rethink where we want this technology to go.

Are you worried about its misuse? Let me know in the comments below!

Sources:

1. Turnitin

2. Tyton Partners

3. Center for Democracy & Technology

4. Oliver Wyman Forum

5. CNN World

6. Forrester Consulting via Workday

7. Ipsos Consumer Tracker

8. ISE

9. Workable

10. Book and Artist

11. Data Privacy and Security Insider

12. Reuters

13. Houston Law Review

14. McAfee

15. Egress

16. SoSafe

17. SlashNext

18. SlashNext – WormGPT

19. KrebsOnSecurity

20. Indiana University via the WSJ

21. WSJ

22. McAfee – India

23. Fortune

24. Sumsub

25. NewsGuard via the Washington Post

26. Ipsos Global Advisor

27. Misinformation Review

28. Jumio

29. DeepMind

30. Center for Democracy & Technology

31. Channel 4 News via The Guardian

32. Deeptrace

33. NBC News

34. NBC News 2

35. SEMRush via Law News

36. Supercreator

37. Forbes

38. MSN

39. Wired

40. BBC

41. The Guardian

42. BBC 2

43. Korea Times

44. CNN

45. Wired – Nudify

46. IWF
